Cohere's Command and Command Light

Note

For most use cases, we recommend our latest model, Command A, instead.

Model Details

The Command family of models responds well to instruction-like prompts and is available in two variants: command-light and command. The command model performs better, while command-light is a good option for applications that need fast responses.
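The choice between the two variants comes down to a speed-versus-performance trade-off. The following is a minimal sketch of that decision. Only the variant names command and command-light come from this page. The helper `choose_command_variant` and its single `latency_sensitive` flag are hypothetical and exist only for illustration.

```python
from typing import Literal

# Variant names are taken from the text above; the selection policy below is
# a hypothetical illustration, not an official recommendation engine.
CommandVariant = Literal["command", "command-light"]


def choose_command_variant(latency_sensitive: bool) -> CommandVariant:
    """Pick a Command variant depending on whether fast responses matter most."""
    if latency_sensitive:
        # command-light is the faster option.
        return "command-light"
    # command performs better when latency is less critical.
    return "command"


if __name__ == "__main__":
    print(choose_command_variant(latency_sensitive=True))   # command-light
    print(choose_command_variant(latency_sensitive=False))  # command
```

In practice, you would pass the returned name as the model identifier in your API request. A real application may weigh other factors, such as cost or output quality on your own prompts.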

For information on toxicity, safety, and responsible use of this model, check out our Command model card.

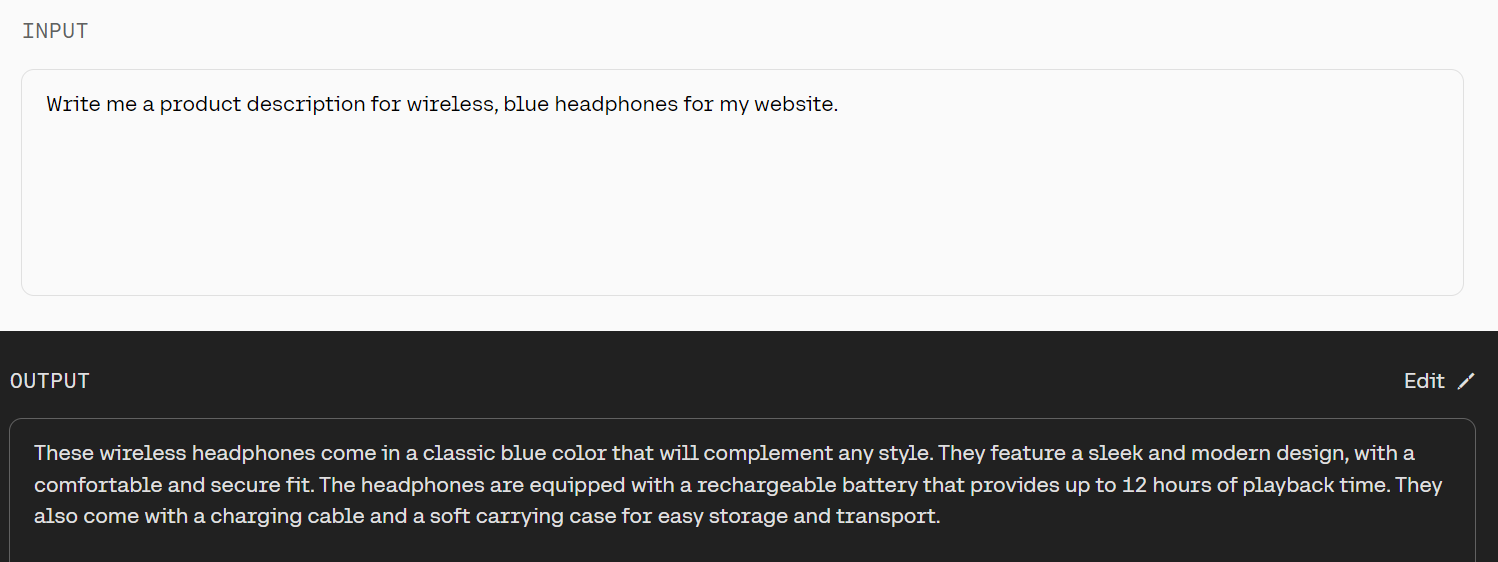

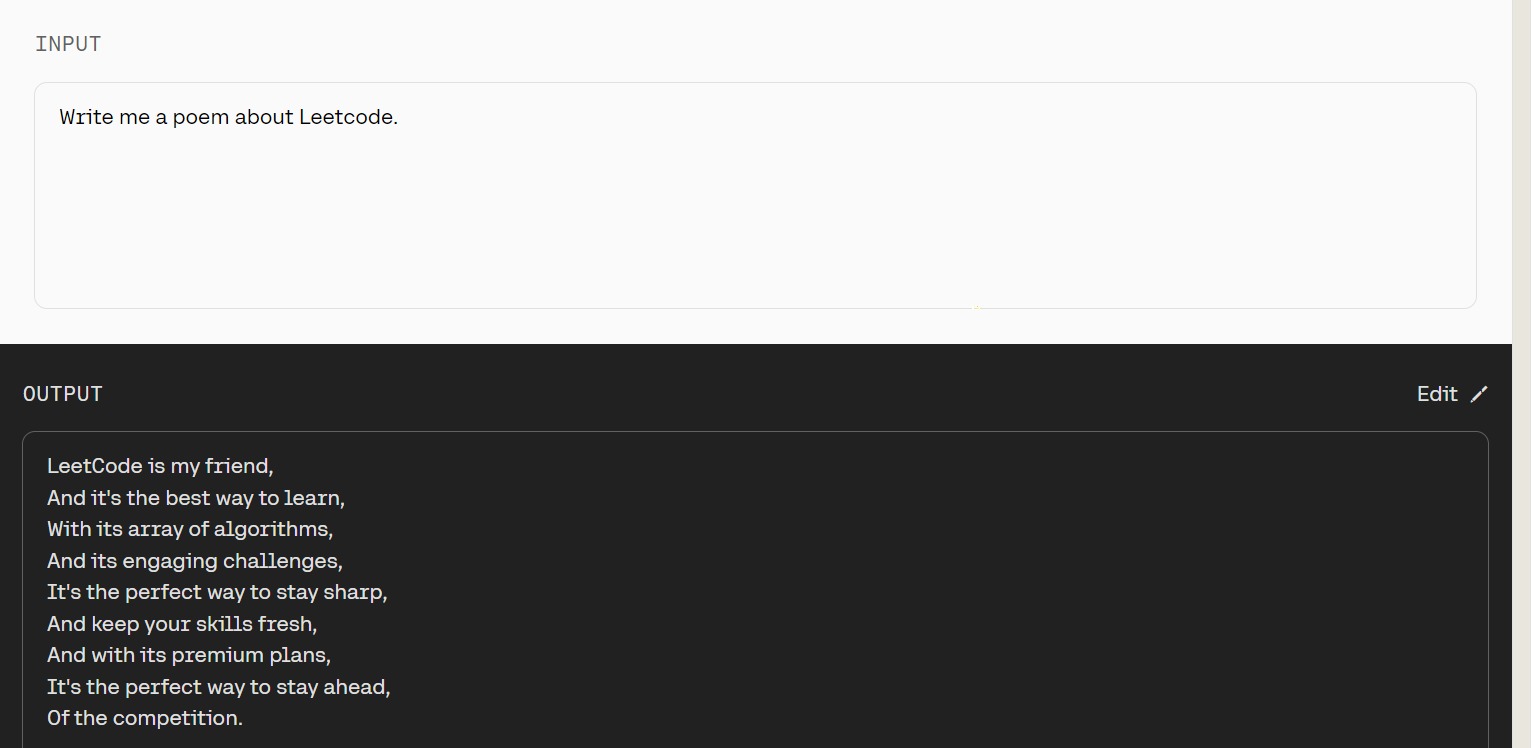

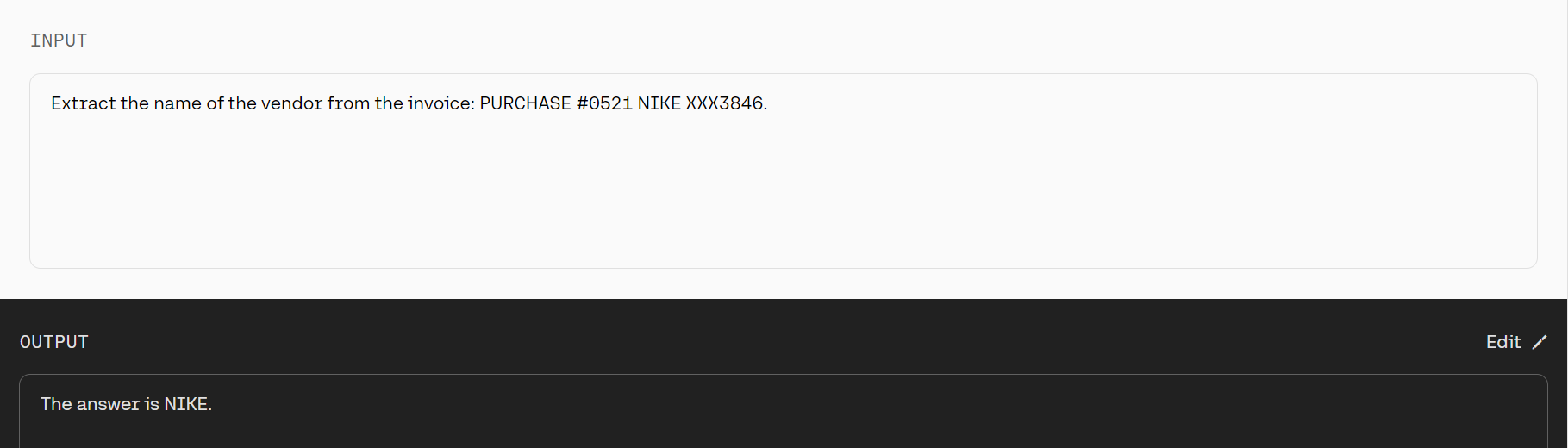


FAQ

Can users train Command?

Users cannot train Command in OS at this time. However, our team can handle this on a case-by-case basis. Please email team@cohere.com if you’re interested in training this model.

Where can I leave feedback about Cohere generative models?

Please leave feedback on Discord.

What’s the context length of the Command models?

A model’s “context length” is the number of tokens it can process at one time. In the table above, you can find the context length (and a few other relevant parameters) for the different versions of the Command models.
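In practice, the context length acts as a per-request token budget. The sketch below assumes, as is typical for decoder-only language models, that the window must hold both the prompt and all generated tokens. It also assumes that token counts come from the same tokenizer the model uses. The `ContextBudget` class is hypothetical. The value 4096 in the usage example is a placeholder and is not taken from the table above, so substitute the context length listed there for your model version.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ContextBudget:
    """Hypothetical token budget for a single request to one model version."""

    context_length: int  # maximum number of tokens the model processes at one time

    def fits(self, prompt_tokens: int, max_output_tokens: int) -> bool:
        # Assumption: prompt tokens and generated tokens share the same window.
        return prompt_tokens + max_output_tokens <= self.context_length

    def max_output_for(self, prompt_tokens: int) -> int:
        # Tokens left for generation once the prompt occupies the window (never negative).
        return max(0, self.context_length - prompt_tokens)


if __name__ == "__main__":
    # 4096 is a placeholder, not a value from the model table.
    budget = ContextBudget(context_length=4096)
    print(budget.fits(prompt_tokens=3000, max_output_tokens=500))  # True
    print(budget.fits(prompt_tokens=3900, max_output_tokens=500))  # False
    print(budget.max_output_for(prompt_tokens=3900))               # 196
```

If a request does not fit, the usual options are to shorten the prompt or to lower the maximum number of output tokens.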