A Deeper Dive Into Semantic Search

In this chapter, you’ll dive deeper into building a semantic search model using the Embed endpoint. You’ll use this model to search for answers in a large text dataset.

Colab Notebook

This chapter comes with a corresponding Colab notebook, and we encourage you to follow it along as you read the chapter.

For the setup, please refer to the Setting Up chapter at the beginning of this module.

Introduction

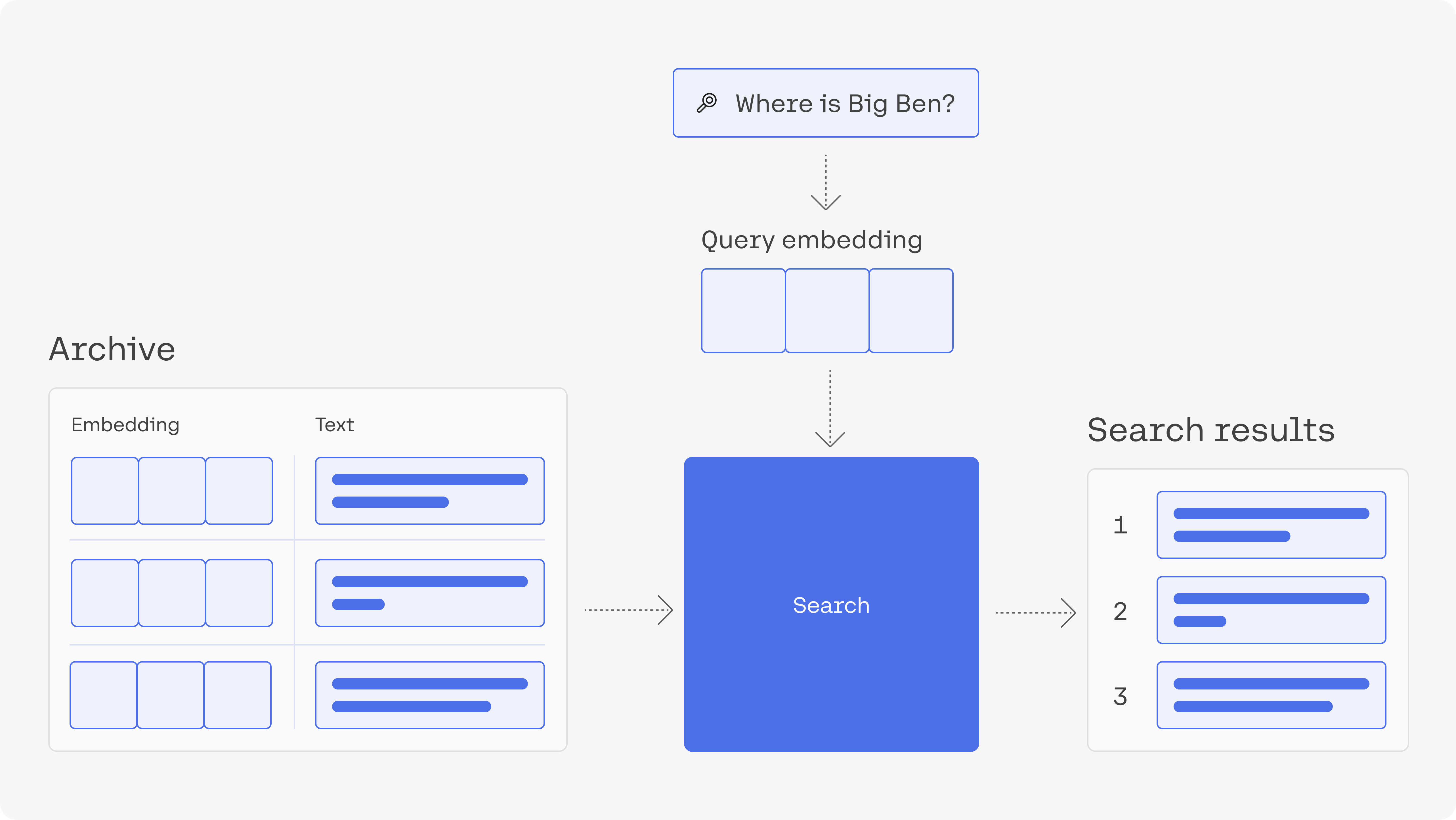

In module 2, you learned about semantic search, and then in a previous chapter in this module, you built a simple semantic search model using text embeddings. In this chapter, you’ll build a similar semantic search model in a much larger dataset, which is made up of questions. Since the dataset is larger, we’ll use a tool that will speed up the nearest neighbors algorithm here.

As you’ve seen before, semantic search goes way beyond keyword search. The applications of semantic search go beyond building a web search engine. They can empower a private search engine for internal documents or records. It can be used to power features like StackOverflow’s “similar questions” feature.

Contents

- Get the archive of questions

- Embed the archive

- Search using an index and nearest neighbour search

- Visualize the archive based on the embeddings.

1. Download the Dependencies

2. Get the Archive of Questions

We’ll use the trec dataset which is made up of questions and their categories.

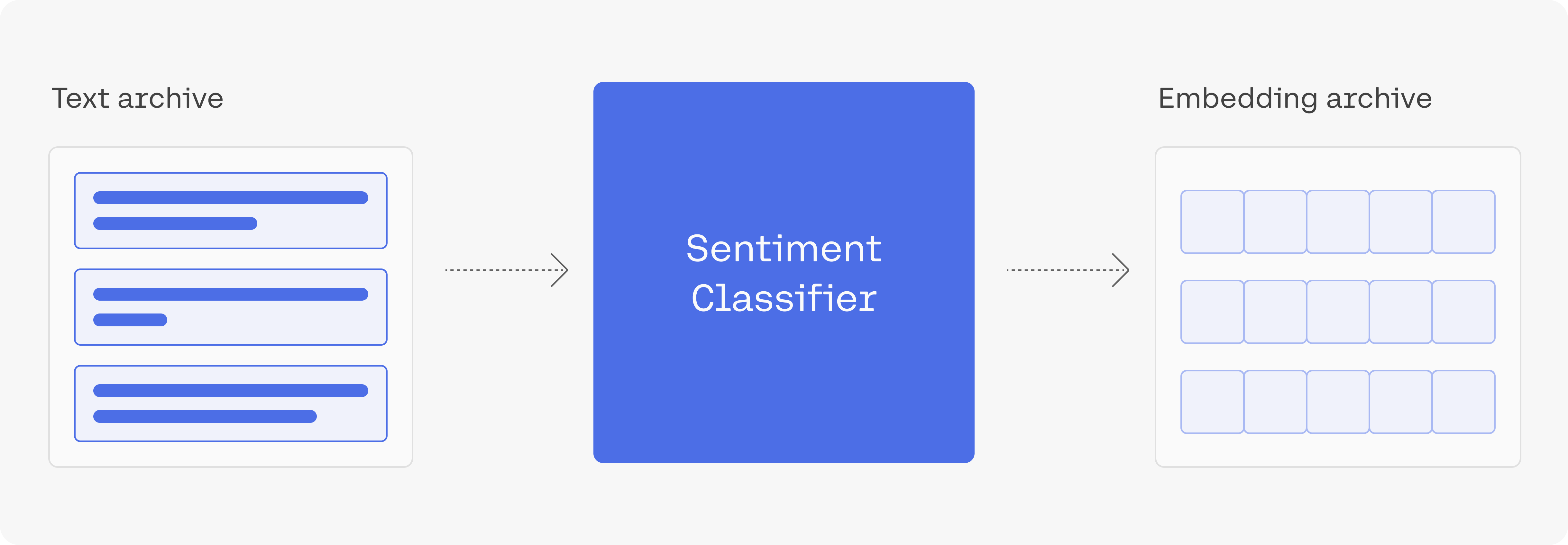

3. Embed the Archive

Let’s now embed the text of the questions.

To get a thousand embeddings of this length should take a few seconds.

4. Build the Index, search Using an Index and Conduct Nearest Neighbour Search

Let’s build an index using the library called annoy. Annoy is a library created by Spotify to do nearest neighbour search; nearest neighbour search is an optimization problem of finding the point in a given set that is closest (or most similar) to a given point.

After building the index, we can use it to retrieve the nearest neighbours either of existing questions (section 3.1), or of new questions that we embed (section 3.2).

4a. Find the Neighbours of an Example from the Dataset

If we’re only interested in measuring the similarities between the questions in the dataset (no outside queries), a simple way is to calculate the similarities between every pair of embeddings we have.

4b. Find the Neighbours of a User Query

We’re not limited to searching using existing items. If we get a query, we can embed it and find its nearest neighbours from the dataset.

5. Visualize the archive

Conclusion

This concludes this introductory guide to semantic search using sentence embeddings. As you continue the path of building a search product additional considerations arise (like dealing with long texts, or training to better improve the embeddings for a specific use case).

Original Source

This material comes from the post Semantic Search