Basic usage of tool use (function calling)

Overview

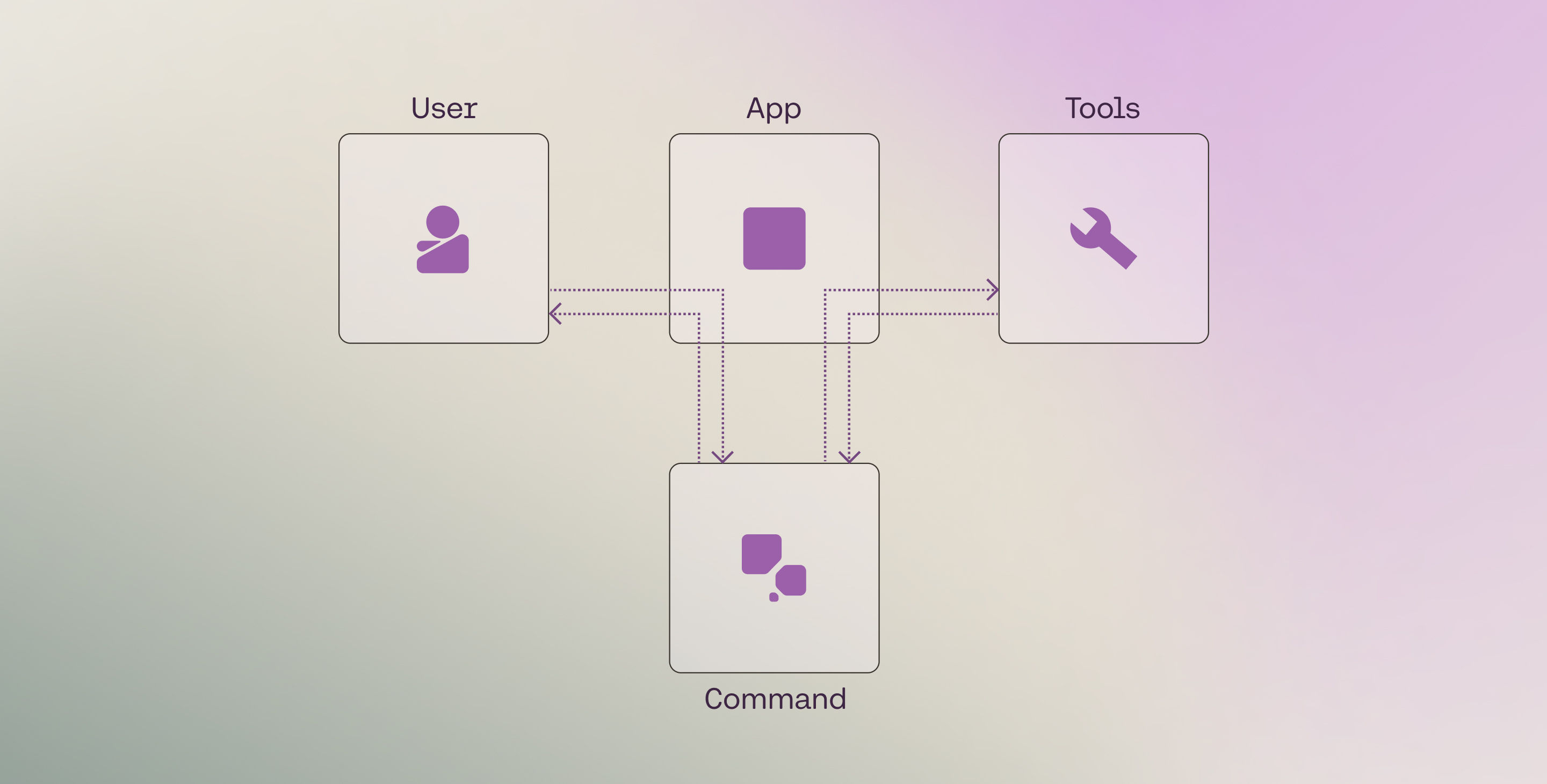

Tool use is a technique that allows developers to connect Cohere’s Command family of models to external tools such as search engines, APIs, functions, and databases.

This opens up a richer set of behaviors: accessing external data sources, taking actions through APIs, interacting with a vector database, or querying a search engine. It is particularly valuable for enterprise developers, since a lot of enterprise data lives in external sources.

The Chat endpoint comes with built-in tool use capabilities such as function calling, multi-step reasoning, and citation generation.

An end-to-end example using tool use to search docs

This is a complete, minimal example that shows one full tool use “round trip”: the model gets a message from a user, generates a tool call, gets tool results, and responds with citations.

In the sections below, we’ll walk through the steps in the tool-use loop, beginning with setup.

Setup

First, import the Cohere library and create a client. The client can target either the Cohere platform or a private deployment.
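As a minimal sketch of both options, the helper below creates a client for either target. The name `make_client` is hypothetical, and the API key and deployment URL are placeholders you would supply yourself. The sketch assumes the Python SDK's `ClientV2` class, which accepts `api_key` and `base_url`.

```python
def make_client(api_key, base_url=None):
    """Create a Cohere v2 client (hypothetical helper, for illustration only)."""
    import cohere  # imported lazily so the sketch loads even without the SDK

    if base_url is None:
        # Cohere platform: only an API key is needed.
        return cohere.ClientV2(api_key=api_key)
    # Private deployment: point the client at your own endpoint.
    return cohere.ClientV2(api_key=api_key, base_url=base_url)
```

For the Cohere platform you would call `make_client("<YOUR_API_KEY>")`; for a private deployment, pass your deployment URL as `base_url`.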

Tool definition

Before we can run a tool use workflow, we have to define the tools. We can break this process into two steps:

- Creating the tool

- Defining the tool schema

Creating the tool

A tool can be any function you create or any external service that returns an object for a given input. Some examples:

- A web search engine

- An email service

- A SQL database

- A vector database

- A documentation search service

- A sports data service

- Another LLM

In this walkthrough, we define a search_docs function that returns relevant documentation snippets for a given query. You can implement any retrieval logic here, but to simplify, we’ll return a few hardcoded documents.
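A minimal sketch of such a function might look like the following. The snippet titles and text are invented placeholders, not real documentation content, and the `query` argument is deliberately ignored.

```python
def search_docs(query):
    """Return documentation snippets for a query (hardcoded for illustration)."""
    # A real implementation would run retrieval against `query`;
    # this stub ignores it and returns fixed placeholder snippets.
    return [
        {
            "title": "Tool use overview",
            "text": "Tool use connects the model to external tools.",
        },
        {
            "title": "Tool use workflow",
            "text": "The loop has four steps and ends with a cited response.",
        },
    ]
```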

The Chat endpoint accepts a string or a list of objects as the tool results, so format the tool’s return value as one of these. The following are some examples.
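The two shapes could look like this. The variable names and values are placeholders chosen for illustration.

```python
# Option 1: a list of objects, one dictionary per result.
docs_as_objects = [
    {"title": "Page A", "text": "First snippet."},
    {"title": "Page B", "text": "Second snippet."},
]

# Option 2: a single string.
docs_as_string = "First snippet. Second snippet."
```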

Defining the tool schema

We also need to define the tool schemas in a format that can be passed to the Chat endpoint. The schema follows the JSON Schema specification and must contain the following fields:

- name: the name of the tool.

- description: a description of what the tool is and what it is used for.

- parameters: a list of parameters that the tool accepts. For each parameter, we need to define the following fields:
  - type: the type of the parameter.
  - properties: the name of the parameter and the following fields:
    - type: the type of the parameter.
    - description: a description of what the parameter is and what it is used for.
  - required: a list of required properties by name, which appear as keys in the properties object.

This schema informs the LLM about what the tool does, and the LLM decides whether to use a particular tool based on the information that it contains.

Therefore, the more descriptive and clear the schema, the more likely the LLM will make the right tool call decisions.

In a typical development cycle, some fields such as name, description, and properties will likely require a few rounds of iterations in order to get the best results (a similar approach to prompt engineering).

Here’s an example:
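The sketch below defines a schema for the search_docs tool with the fields listed above. The description strings are illustrative wording, and the outer `"type": "function"` wrapper is assumed from the function type mentioned for tool calls later on this page.

```python
tools = [
    {
        "type": "function",
        "function": {
            "name": "search_docs",
            "description": "Searches the documentation and returns relevant snippets.",
            "parameters": {
                "type": "object",
                "properties": {
                    "query": {
                        "type": "string",
                        "description": "The search query, in natural language.",
                    }
                },
                # Every name listed here must be a key in "properties".
                "required": ["query"],
            },
        },
    }
]
```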

Tool use workflow

At a high level, there are four steps in the core tool-use loop:

- Step 1: Get user message: A user asks, “How does tool use work in Cohere? Please cite your sources.”

- Step 2: Generate tool calls: A tool call is made to a documentation search tool with something like search_docs("tool use Cohere").

- Step 3: Get tool results: The tool returns relevant documentation snippets (documents).

- Step 4: Generate response and citations: The model provides the answer grounded in those snippets, with citations.

The following sections go through the implementation of these steps in detail.

Step 1: Get user message

In the first step, we get the user’s message and append it to the messages list with the role set to user.

System message

Optional: If you want to define a system message, you can add it to the messages list with the role set to system.
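As a small sketch, the messages list at this point could be built as follows. The system message wording is a placeholder, and that entry can be dropped entirely.

```python
messages = []

# Optional: a system message (placeholder wording).
messages.append(
    {"role": "system", "content": "Answer using the documentation search tool."}
)

# The user's message, with the role set to "user".
messages.append(
    {
        "role": "user",
        "content": "How does tool use work in Cohere? Please cite your sources.",
    }
)
```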

Step 2: Generate tool calls

Next, we call the Chat endpoint to generate the list of tool calls. This is done by passing the parameters model, messages, and tools to the Chat endpoint.

The endpoint will send back a list of tool calls to be made if the model determines that tools are required. If it does, it will return two types of information:

- tool_plan: its reflection on the next steps it should take, given the user query.

- tool_calls: a list of tool calls to be made (if any), together with auto-generated tool call IDs. Each generated tool call contains:
  - id: the tool call ID
  - type: the type of the tool call (function)
  - function: the function to be called, which contains the function’s name and arguments to be passed to the function.

We then append these to the messages list with the role set to assistant.
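One way to sketch this step is with a small helper. The name `generate_tool_calls` is hypothetical; `co` is assumed to be a client whose `chat` method returns a response with a `message` carrying `tool_plan` and `tool_calls`, as described above.

```python
def generate_tool_calls(co, model, messages, tools):
    """Ask the model for tool calls and record them in `messages`."""
    response = co.chat(model=model, messages=messages, tools=tools)
    tool_calls = response.message.tool_calls
    if tool_calls:
        # Record the model's plan and its tool calls as an assistant message.
        messages.append(
            {
                "role": "assistant",
                "tool_plan": response.message.tool_plan,
                "tool_calls": tool_calls,
            }
        )
    return response
```

If the model makes no tool calls, nothing is appended and the caller can read the direct response from the returned object.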

Example response:

By default, when using the Python SDK, the endpoint passes the tool calls as objects of type ToolCallV2 and ToolCallV2Function. With these, you get built-in type safety and validation that helps prevent common errors during development.

Alternatively, you can use plain dictionaries to structure the tool call message.

These two options are shown below.

Python objects

Plain dictionaries
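For the plain-dictionary option, the assistant message could look like the sketch below. The tool plan text and the tool call ID are made-up values. The sketch also assumes that `arguments` is carried as a JSON-encoded string, so verify that against the API reference.

```python
import json

assistant_message = {
    "role": "assistant",
    "tool_plan": "I will search the docs for how tool use works.",  # made-up plan
    "tool_calls": [
        {
            "id": "search_docs_0",  # made-up ID; real IDs are auto-generated
            "type": "function",
            "function": {
                "name": "search_docs",
                # Assumption: arguments travel as a JSON string.
                "arguments": json.dumps({"query": "tool use Cohere"}),
            },
        }
    ],
}
```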

Directly responding

The model can decide not to make any tool call and instead respond to the user message directly. This is described here.

Parallel tool calling

The model can determine that more than one tool call is required. It may call the same tool several times or call different tools, with no fixed limit on the number of calls. This is described here.

Step 3: Get tool results

During this step, the actual function calling happens. We call the necessary tools based on the tool call payloads given by the endpoint.

For each tool call, we append the messages list with:

- the tool_call_id generated in the previous step.

- the content of each tool result, with the following fields:
  - type, which is document
  - document, containing:
    - data: which stores the contents of the tool result.
    - id (optional): a unique ID for the document, for use in citations; auto-generated if not provided.
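The sketch below executes each tool call and appends one tool message per call. It uses plain-dictionary tool calls; with SDK objects you would use attribute access (for example `tc.function.name`) instead. The helper name `run_tool_calls`, the stub `search_docs`, and the `functions_map` lookup are illustrative. Serializing each result into `data` with `json.dumps` is an assumption about the expected format.

```python
import json


def search_docs(query):
    # Stub tool: returns a fixed placeholder snippet.
    return [{"title": "Tool use overview", "text": "Placeholder snippet."}]


# Maps tool names from the schema to the Python functions that implement them.
functions_map = {"search_docs": search_docs}


def run_tool_calls(tool_calls, messages):
    """Execute each tool call and append its results as a tool message."""
    for tc in tool_calls:
        name = tc["function"]["name"]
        args = json.loads(tc["function"]["arguments"])
        results = functions_map[name](**args)
        # One document per result; "id" is omitted, so it would be auto-generated.
        content = [
            {"type": "document", "document": {"data": json.dumps(doc)}}
            for doc in results
        ]
        messages.append(
            {"role": "tool", "tool_call_id": tc["id"], "content": content}
        )
    return messages
```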

Step 4: Generate response and citations

By this time, the tool call has already been executed, and the result has been returned to the LLM.

In this step, we call the Chat endpoint to generate the response to the user, again by passing the parameters model, messages (which has now been updated with information from the tool calling and tool execution steps), and tools.

The model generates a response to the user, grounded on the information provided by the tool.

We then append the response to the messages list with the role set to assistant.
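A sketch of this final call could look like the following. The helper name `generate_response` is hypothetical, and it assumes the response text is available at `response.message.content[0].text`.

```python
def generate_response(co, model, messages, tools):
    """Generate the final answer and record it in `messages`."""
    response = co.chat(model=model, messages=messages, tools=tools)
    # Assumption: the first content block holds the response text.
    text = response.message.content[0].text
    messages.append({"role": "assistant", "content": text})
    return response
```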

Example response:

It also generates fine-grained citations, which are included out-of-the-box with the Command family of models. Here, we see the model generating two citations, one for each specific span in its response, where it uses the tool result to answer the question.
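To inspect the citations, a helper along these lines may be useful. It assumes each citation exposes `start`, `end`, `text`, and a `sources` list whose items have an `id`; check these attribute names against the SDK version you use. The name `format_citations` is hypothetical.

```python
def format_citations(citations):
    """Render each citation as '[start:end] text <- source IDs'."""
    lines = []
    for c in citations or []:  # tolerate a missing citations list
        source_ids = [s.id for s in c.sources]
        lines.append(f"[{c.start}:{c.end}] {c.text!r} <- {source_ids}")
    return lines
```

You would typically pass it `response.message.citations` from the final Chat call.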

Example response:

Multi-step tool use (agents)

Above, we assume the model performs tool calling only once (either a single call or parallel calls) and then generates its response. This is not always the case: the model might decide to make a sequence of tool calls in order to answer the user request. This means that steps 2 and 3 will run multiple times in a loop. This is called multi-step tool use and is described here.
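As a rough sketch of that loop, the helper below repeats steps 2 and 3 until the model stops requesting tools. The name `run_agent`, the `functions_map` argument, and the `max_steps` safety cap are illustrative additions, not part of the API. `co` is assumed to behave like the client used in the earlier steps.

```python
import json


def run_agent(co, model, messages, tools, functions_map, max_steps=5):
    """Repeat steps 2 and 3 until the model returns no more tool calls."""
    response = co.chat(model=model, messages=messages, tools=tools)
    steps = 0
    while response.message.tool_calls and steps < max_steps:
        # Step 2: record the plan and the tool calls.
        messages.append(
            {
                "role": "assistant",
                "tool_plan": response.message.tool_plan,
                "tool_calls": response.message.tool_calls,
            }
        )
        # Step 3: execute each call and record its results.
        for tc in response.message.tool_calls:
            args = json.loads(tc.function.arguments)
            results = functions_map[tc.function.name](**args)
            content = [
                {"type": "document", "document": {"data": json.dumps(r)}}
                for r in results
            ]
            messages.append(
                {"role": "tool", "tool_call_id": tc.id, "content": content}
            )
        response = co.chat(model=model, messages=messages, tools=tools)
        steps += 1
    return response
```

The cap guards against an unbounded loop; if it is reached, the returned response may still contain pending tool calls rather than a final answer.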

State management

This section provides a more detailed look at how the state is managed via the messages list as described in the tool use workflow above.

At each step of the workflow, the endpoint requires that we append specific types of information to the messages list. This is to ensure that the model has the necessary context to generate its response at a given point.

In summary, each single turn of a conversation that involves tool calling consists of:

- A user message, containing the user message
  - content

- An assistant message, containing the tool calling information
  - tool_plan
  - tool_calls
    - id
    - type
    - function (consisting of name and arguments)

- A tool message, containing the tool results
  - tool_call_id
  - content, containing a list of documents where each document contains the following fields:
    - type
    - document (consisting of data and optionally id)

- A final assistant message, containing the model’s response
  - content

These correspond to the four steps described above. The list of messages is shown below.
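Written out with plain dictionaries, one such turn might look like the sketch below. All values in angle brackets are placeholders rather than real model output, and `arguments` is assumed to be a JSON-encoded string.

```python
import json

messages = [
    # Step 1: user message
    {
        "role": "user",
        "content": "How does tool use work in Cohere? Please cite your sources.",
    },
    # Step 2: assistant message with the tool plan and tool calls
    {
        "role": "assistant",
        "tool_plan": "<model's plan>",
        "tool_calls": [
            {
                "id": "<tool call ID>",
                "type": "function",
                "function": {
                    "name": "search_docs",
                    "arguments": json.dumps({"query": "tool use Cohere"}),
                },
            }
        ],
    },
    # Step 3: tool message with the results, linked by tool_call_id
    {
        "role": "tool",
        "tool_call_id": "<tool call ID>",
        "content": [
            {
                "type": "document",
                "document": {"data": "<tool result>", "id": "<optional ID>"},
            }
        ],
    },
    # Step 4: final assistant message with the response
    {"role": "assistant", "content": "<model's response>"},
]
```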

The sequence of messages is represented in the diagram below.

Note that this sequence represents a basic usage pattern in tool use. The next page describes how this is adapted for other scenarios.